How can explainability be integrated into AI-driven system interaction, and how does it influence user trust, understanding, and control in dynamic OS environments?

PhAI

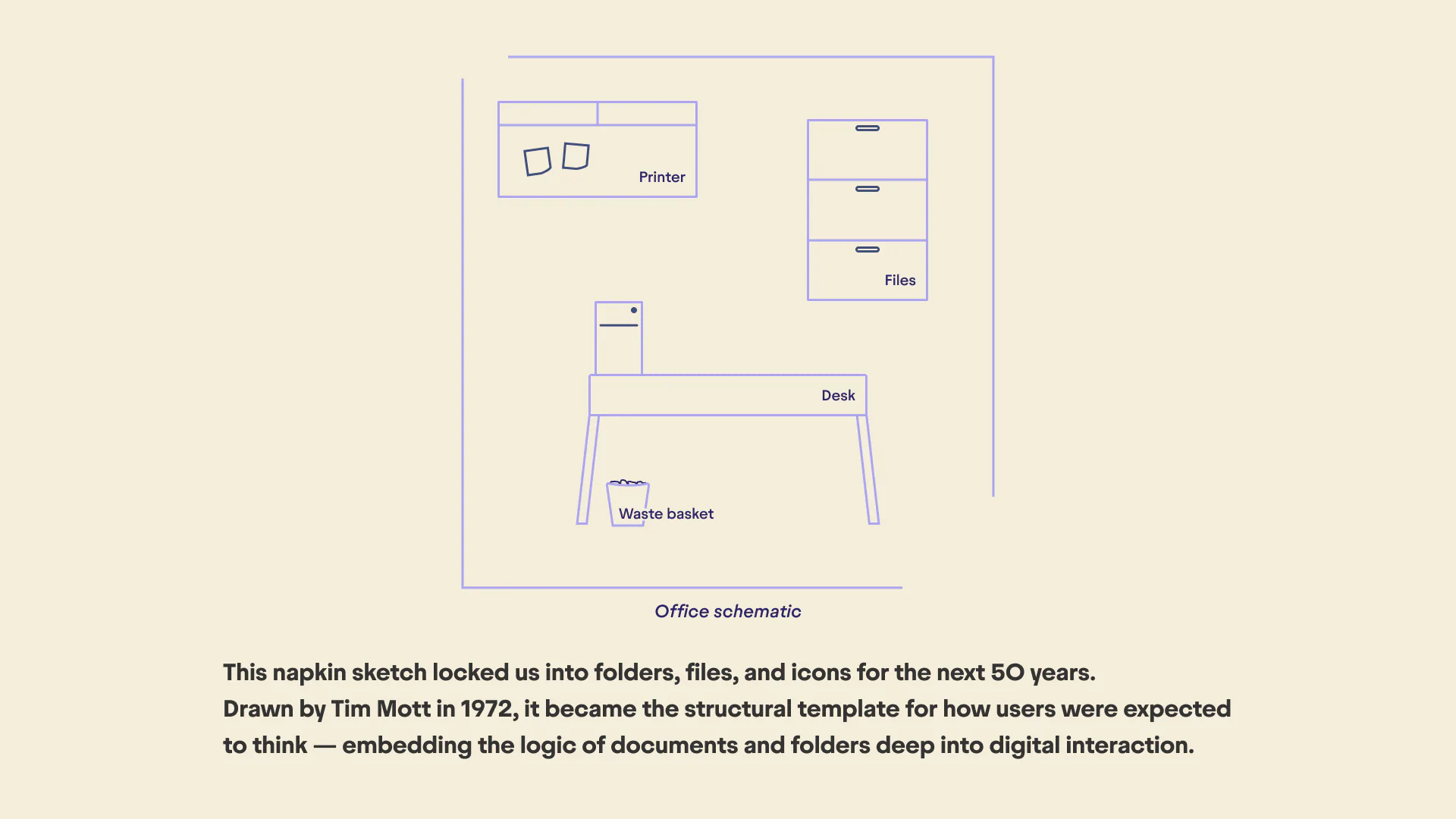

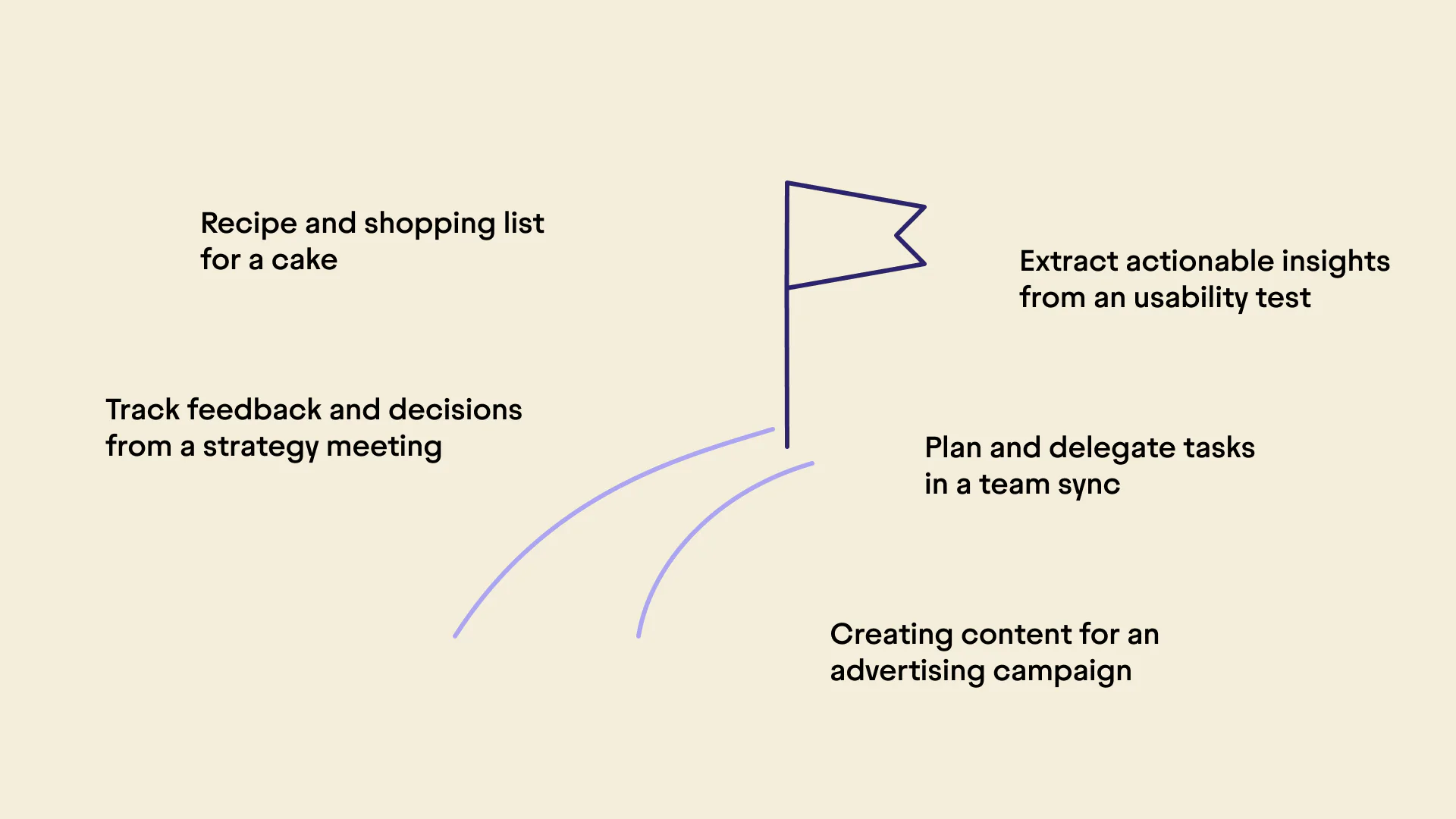

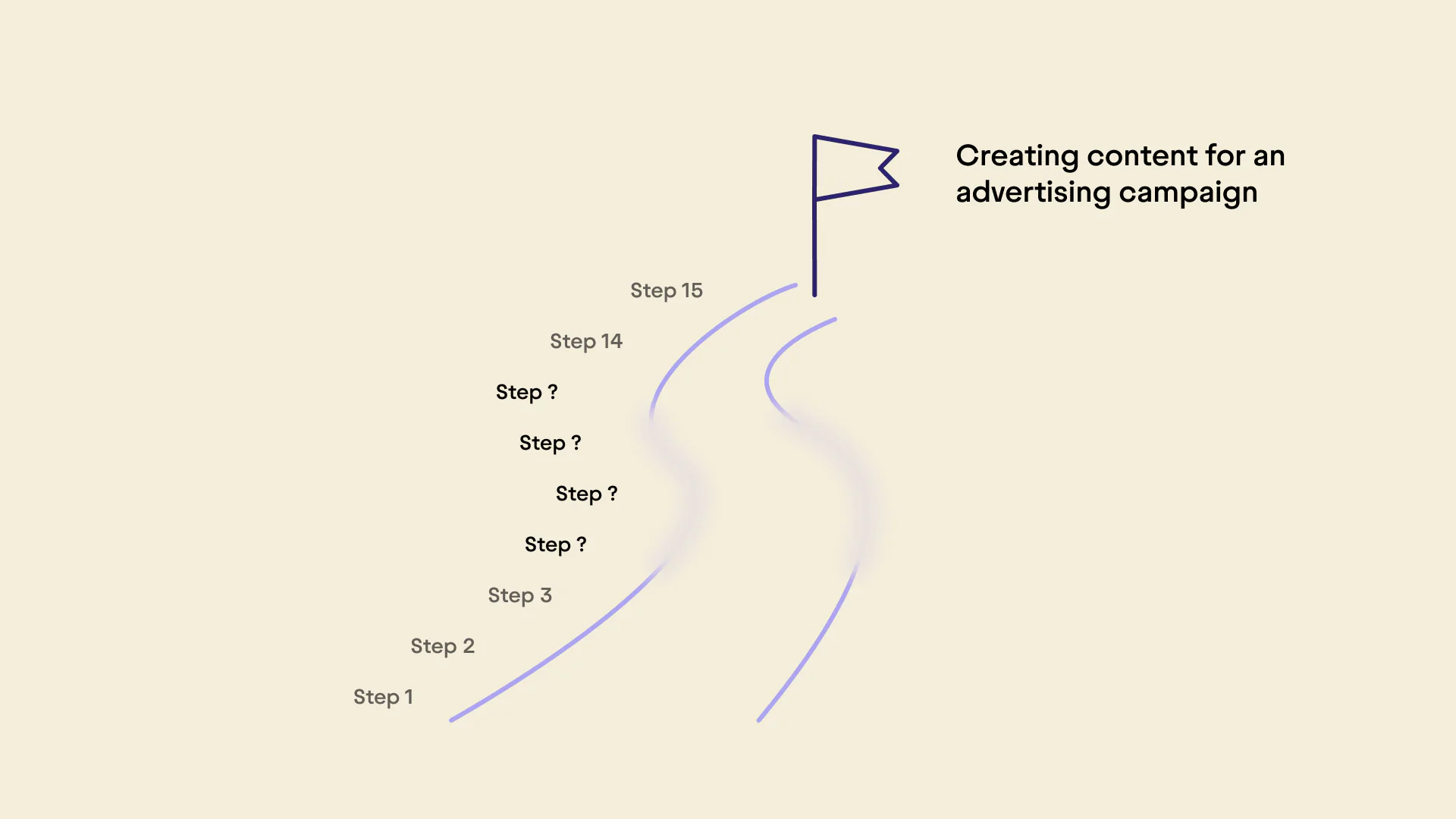

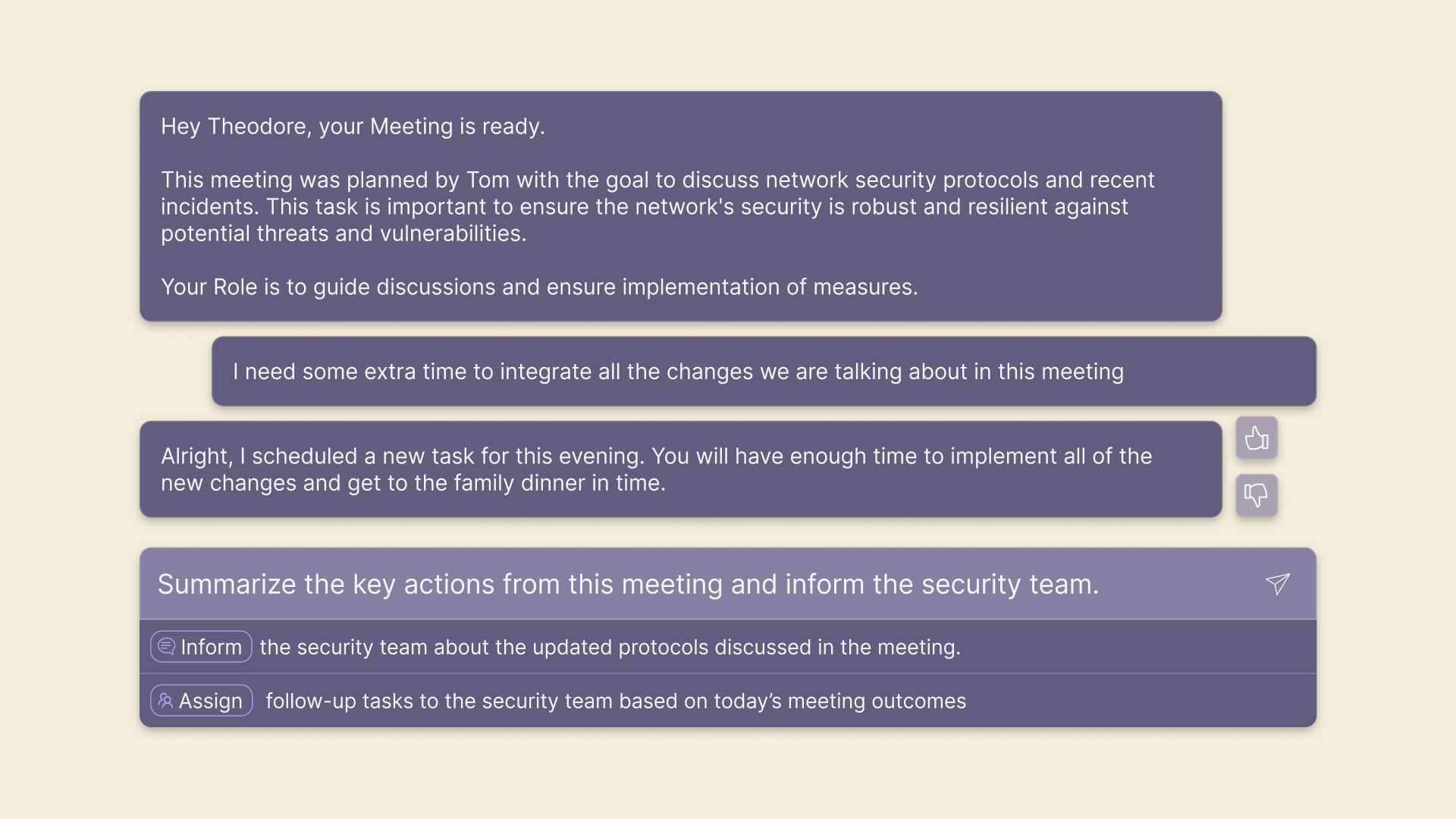

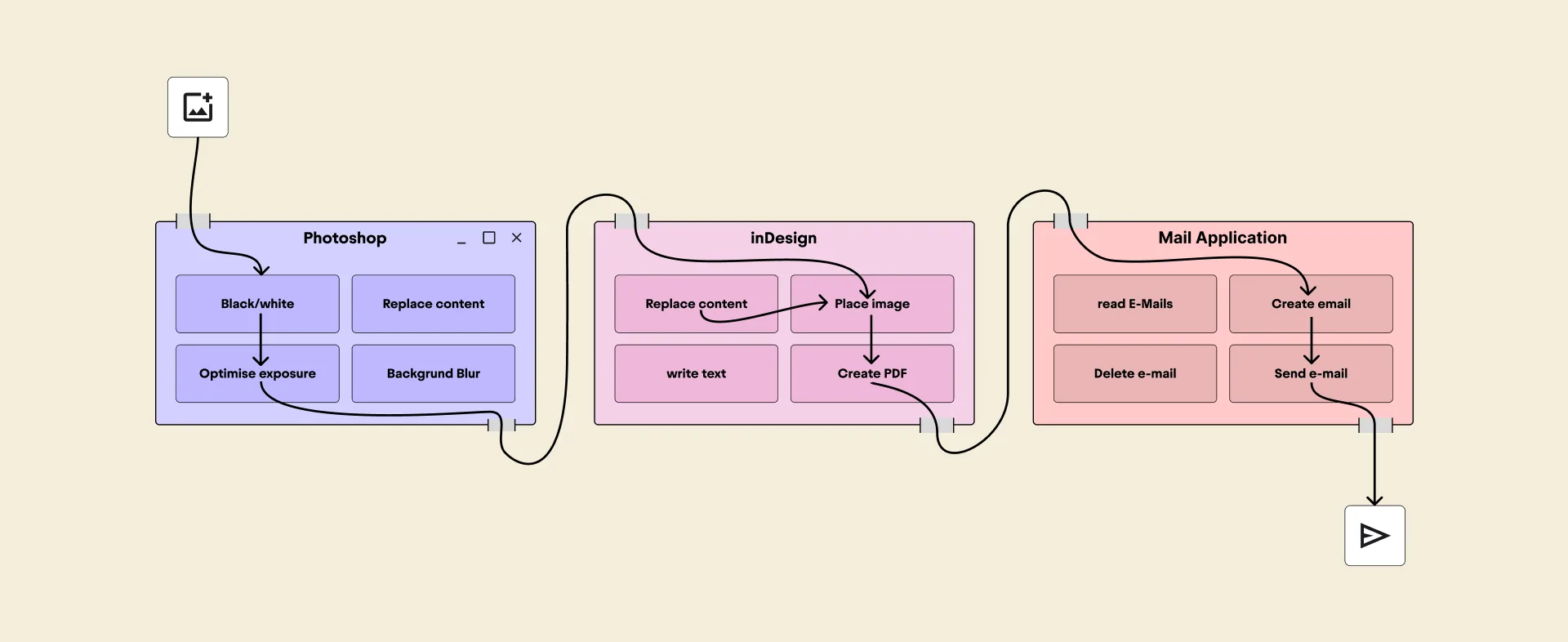

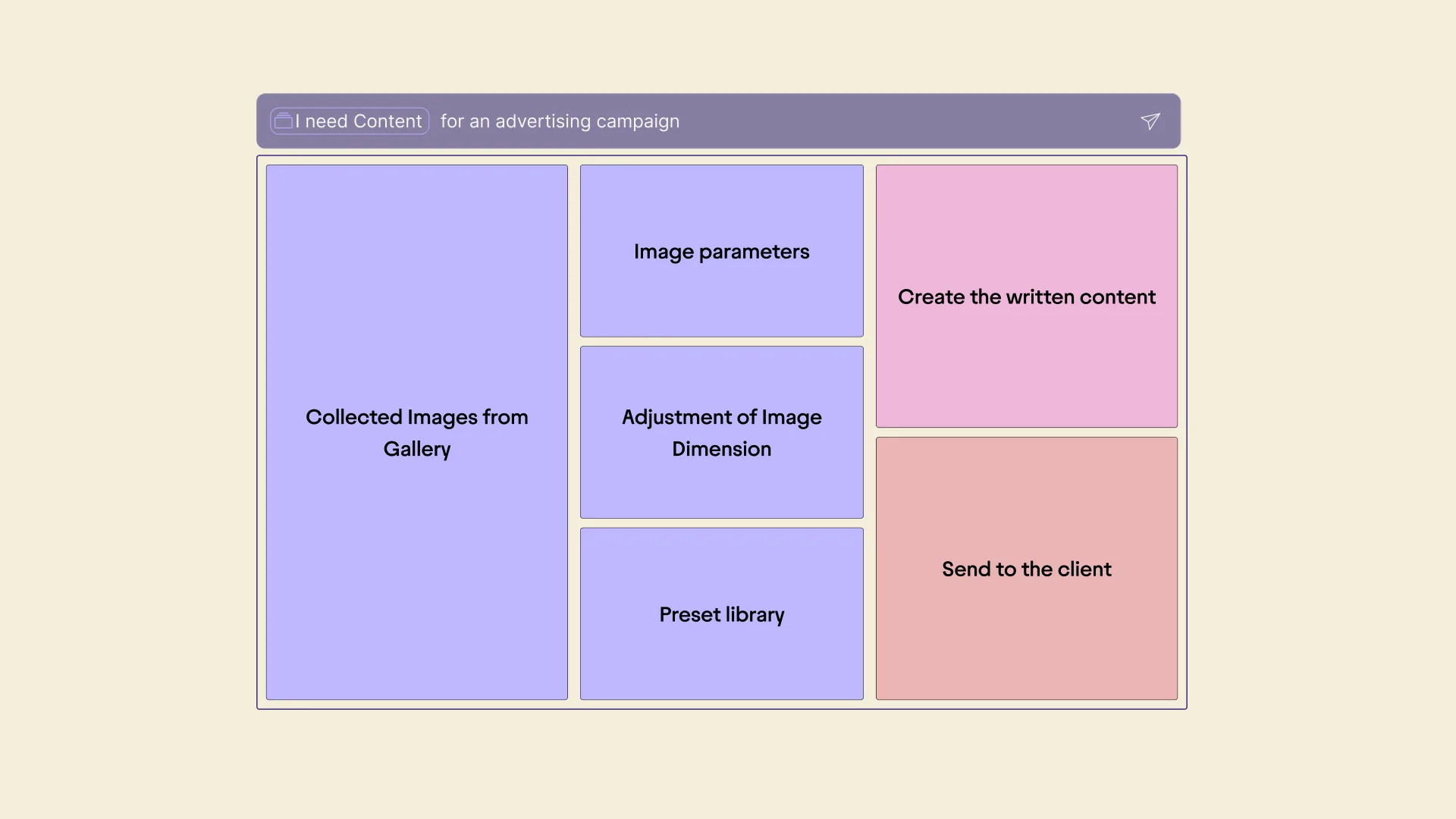

PhAI is a speculative operating system (OS) concept that challenges the legacy of app-based computing. Instead of relying on static containers such as files or folders, it builds task-specific interfaces on the fly from the user’s intent, context, and workflow. The system replaces app invocation with modular function orchestration, integrates explainable AI, and adapts to the way the user thinks. PhAI envisions operating systems as cognitive partners, shaped by users rather than merely used by them, and shifts the burden of adaptation from human to machine.