Emotions are rarely singular. They overlap, combine, and fluctuate, which makes assigning them to predefined categories inherently reductive. People perceive emotions differently, and few emotional states can be captured by a single word.

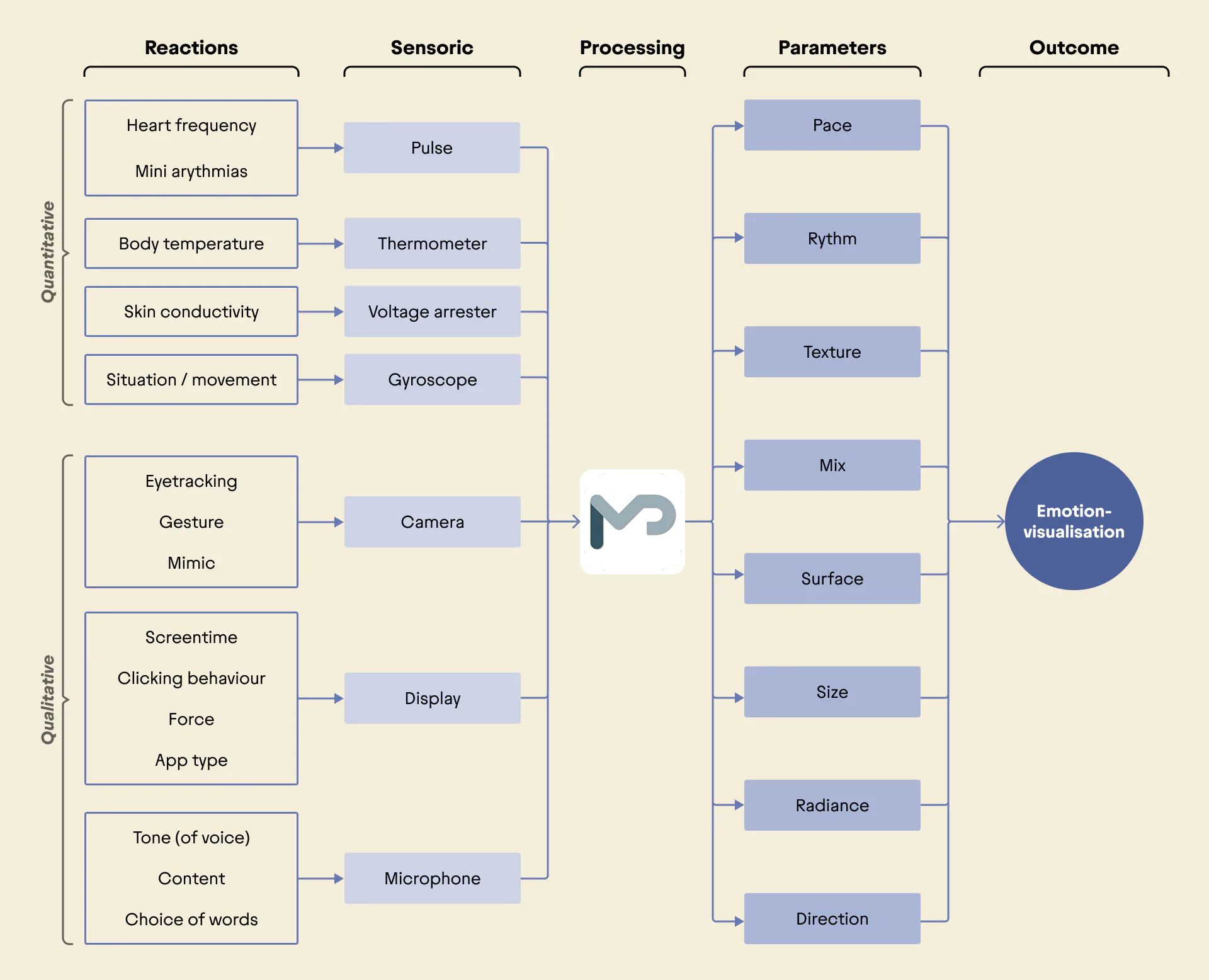

That’s why MPathy avoids naming emotions. Instead, we designed an abstract visual system that reflects intensity, layering, and change without forcing interpretation. The aim is not to define what is felt, but to make felt experience perceptible. Paired with written messages, these visuals reduce ambiguity and make emotional tone more tangible than text alone can.